Model Deployment, Observability & Governance

This guide walks you through the process of deploying, monitoring, and managing AI models in Nexastack.

By following these steps, you'll deploy a model from the marketplace, observe its performance, and apply governance controls across production environments.

Goal

Understand how to:

- Deploy AI models on your selected infrastructure (e.g., Kubernetes cluster).

- Observe real-time inference metrics and performance.

- Manage model governance and lifecycle post-deployment.

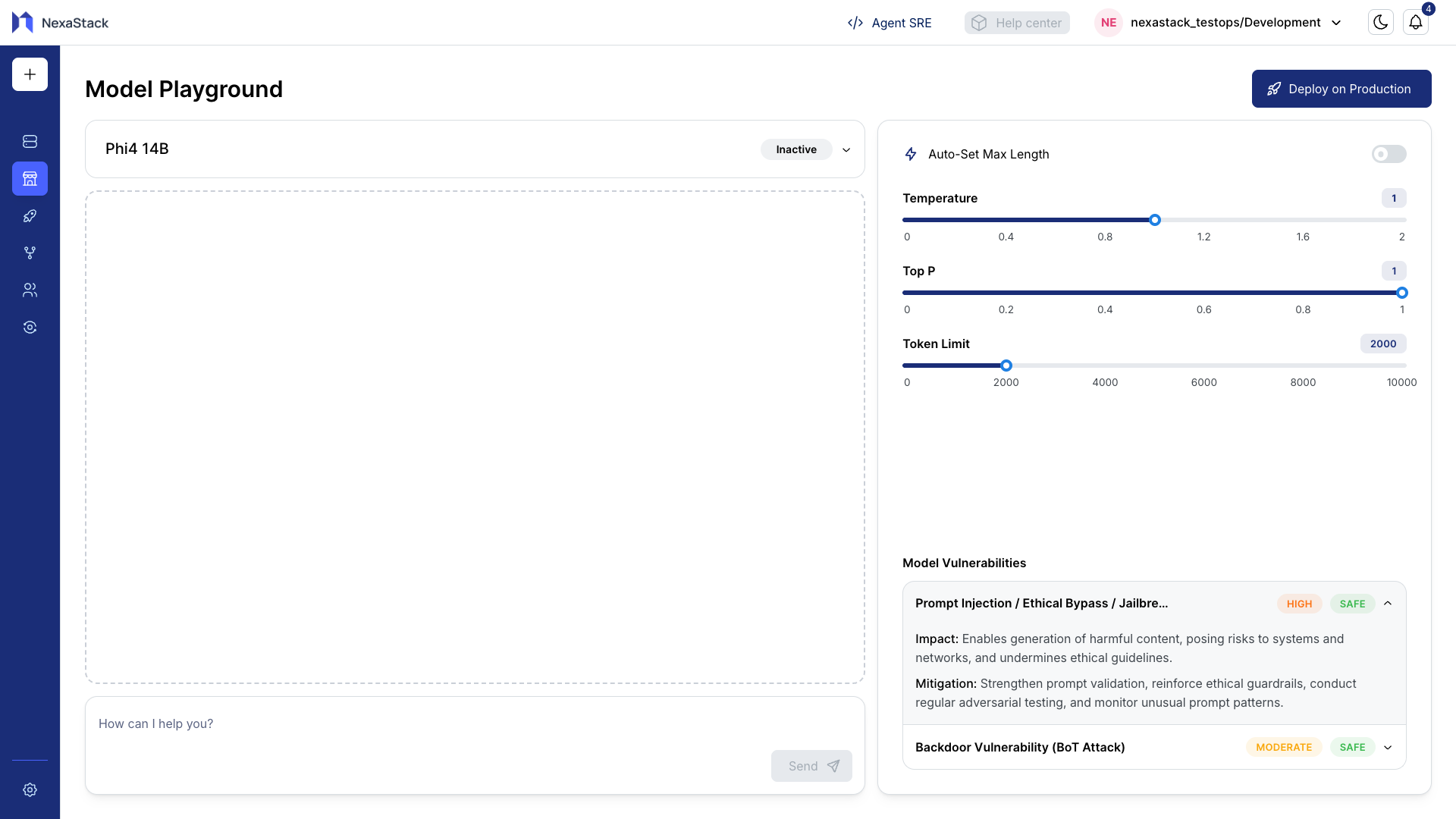

Step 1: Select and Deploy a Model

- Navigate to the Model Marketplace in Nexastack.

- Choose any available model (for example, Qwen-32B or Gemma-7B).

- Click on Deploy to Production.

- Select one of your available onboarded clusters from the dropdown list.

- Click Proceed to start the deployment.

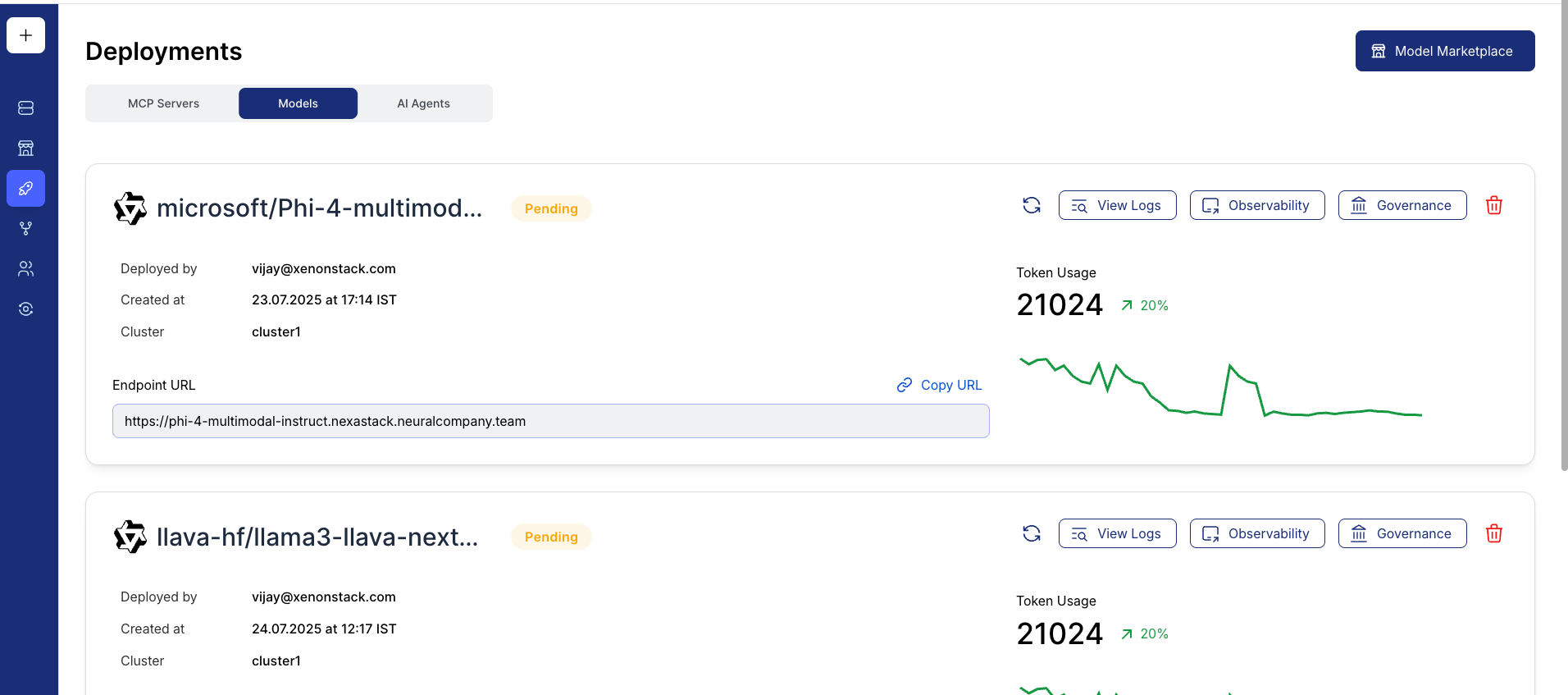

Step 2: Monitor Deployment Status

After initiating deployment:

- The deployment will move through stages: Pending → Running → Succeeded/Failed

- Once completed, you’ll see the Inference URL that allows you to access the deployed model endpoint.
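
If you want to track the stages above from a script rather than the UI, the logic can be sketched as a simple polling loop. This guide does not describe a Nexastack status API, so the sketch takes a caller-supplied `get_status` function (a hypothetical hook) that returns one of the stage names listed above. The interval and timeout values are also assumptions.

```python
import time
from typing import Callable

# Terminal stages as named in this guide; reaching either one ends the wait.
TERMINAL_STAGES = {"Succeeded", "Failed"}


def wait_for_deployment(
    get_status: Callable[[], str],
    interval_s: float = 5.0,
    timeout_s: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll get_status() until it reports a terminal stage or the timeout passes.

    get_status is a hypothetical callable you supply; it is not a Nexastack API.
    """
    waited = 0.0
    while True:
        status = get_status()
        if status in TERMINAL_STAGES:
            return status
        if waited >= timeout_s:
            raise TimeoutError(f"deployment still {status!r} after {timeout_s}s")
        sleep(interval_s)
        waited += interval_s
```

Passing `sleep` as a parameter keeps the loop easy to exercise in tests without real delays.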

You can use the Inference URL to connect the deployed model with your internal tools, pipelines, or API clients.
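
As a rough illustration of calling the endpoint from an API client, the sketch below builds a JSON POST request with the Python standard library. The request schema (`{"prompt": ...}`), the bearer-token header, and the example URL are all assumptions made for illustration. Check the request format your deployed model actually expects before relying on it.

```python
import json
import urllib.request
from typing import Optional


def build_inference_request(
    inference_url: str, prompt: str, api_key: Optional[str] = None
) -> urllib.request.Request:
    """Build a POST request for the Inference URL shown after deployment."""
    # Hypothetical payload shape; the real schema depends on the deployed model.
    payload = {"prompt": prompt}
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Assumes bearer-token auth, which this guide does not confirm.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        inference_url,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def call_model(request: urllib.request.Request, timeout_s: float = 30.0) -> dict:
    """Send the request and decode a JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout_s) as response:
        return json.loads(response.read().decode("utf-8"))
```

Separating request construction from the network call makes it possible to inspect or log the outgoing request before sending it.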

Step 3: Observability & Monitoring

Once your model is live, Nexastack provides built-in observability dashboards to track and analyze its performance.

Key Metrics Monitored:

- Latency — Response time per request

- Throughput — Requests handled per second

- Error Rate — Failed inference calls

- Resource Utilization — CPU and memory usage

- Model Health — Overall uptime and service stability
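
To make the first three metrics concrete, here is a minimal sketch of how latency percentiles, throughput, and error rate can be derived from a batch of request records. The `RequestRecord` type and the nearest-rank percentile method are choices made for this example. The guide does not state how the Nexastack dashboards compute these values.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RequestRecord:
    latency_ms: float  # response time for one request
    ok: bool           # False if the inference call failed


def percentile(sorted_values: List[float], p: float) -> float:
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, math.ceil(p / 100 * len(sorted_values)))
    return sorted_values[rank - 1]


def summarize(records: List[RequestRecord], window_s: float) -> Dict[str, float]:
    """Summarize the requests observed during a window of window_s seconds."""
    if not records or window_s <= 0:
        raise ValueError("need at least one record and a positive window")
    latencies = sorted(r.latency_ms for r in records)
    failures = sum(1 for r in records if not r.ok)
    return {
        "p50_latency_ms": percentile(latencies, 50),
        "p95_latency_ms": percentile(latencies, 95),
        "throughput_rps": len(records) / window_s,
        "error_rate": failures / len(records),
    }
```

For example, four requests over a two-second window with one failure give a throughput of 2.0 requests per second and an error rate of 0.25.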

You can also integrate Prometheus and Grafana for custom visualization of inference metrics.

Step 4: Governance and Lifecycle Management

Governance controls help keep your deployed models secure, compliant, and version-controlled.

Key Governance Features:

- Model Versioning: Track all deployed versions and roll back if needed.

- Access Control: Define user permissions for deployment and access.

- Audit Logs: Monitor changes, deployment activities, and configuration updates.

- Policy Enforcement: Apply compliance rules to ensure responsible AI usage.
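
The way versioning, access control, and audit logging interact can be illustrated with a small in-memory model. Everything here (the `ModelRegistry` class, the role names, and the permission table) is hypothetical and exists only to show the concepts. It is not the Nexastack API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Optional, Set

# Hypothetical role-to-permission table for this example.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "admin": {"deploy", "rollback"},
    "engineer": {"deploy"},
    "viewer": set(),
}


@dataclass
class ModelRegistry:
    versions: List[str] = field(default_factory=list)
    audit_log: List[dict] = field(default_factory=list)

    def _authorize(self, user: str, role: str, action: str) -> None:
        """Record every attempt in the audit log, then reject if not permitted."""
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user, "action": action, "allowed": allowed,
        })
        if not allowed:
            raise PermissionError(f"role {role!r} may not {action}")

    def deploy(self, user: str, role: str, version: str) -> None:
        self._authorize(user, role, "deploy")
        self.versions.append(version)

    def rollback(self, user: str, role: str) -> str:
        """Drop the newest version and return the one that becomes active."""
        self._authorize(user, role, "rollback")
        if len(self.versions) < 2:
            raise ValueError("no earlier version to roll back to")
        self.versions.pop()
        return self.versions[-1]

    @property
    def active(self) -> Optional[str]:
        return self.versions[-1] if self.versions else None
```

Logging the attempt before the permission check means that denied actions also appear in the audit trail, which is usually what a compliance review needs.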

You’ve successfully deployed, observed, and governed a model in Nexastack.

Your model is now production-ready with full observability and governance controls in place.